Introduction

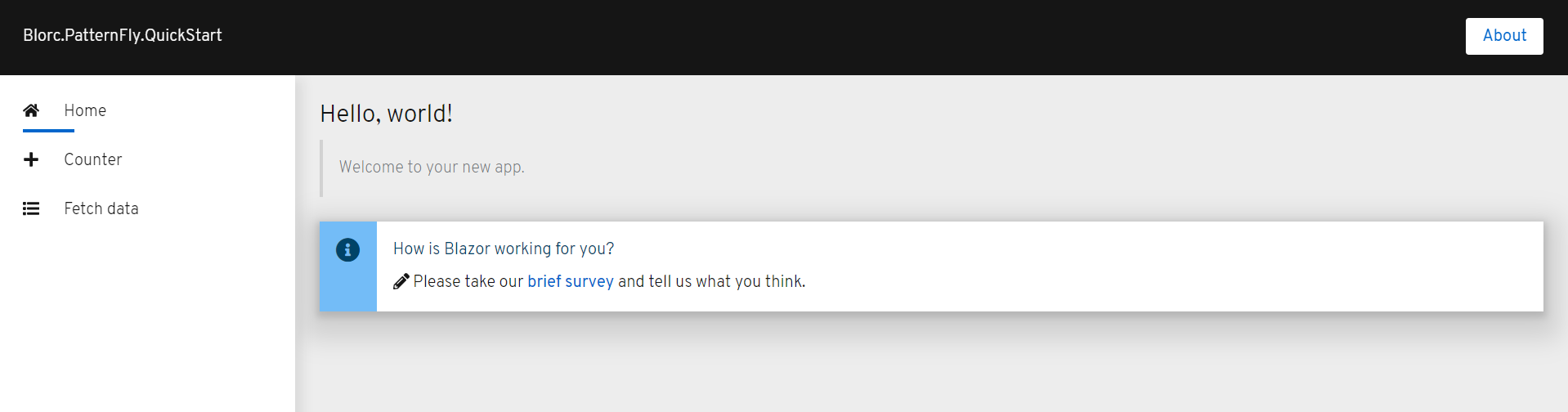

A long time ago, I wrote the post "Why should you start using NDepend?", which I consider the best post I have ever written, or almost ;)

NDepend is a static analysis tool for .NET. It analyzes code without executing it and is generally used to ensure conformance with coding guidelines. As its authors like to say, it will likely find hundreds or even thousands of issues affecting your codebase.

After the first pre-releases of StoneAssemblies.MassAuth, I decided to X-ray it with NDepend. I attached a new NDepend project to the StoneAssemblies.MassAuth solution, filtered out the test and demo assemblies, and hit the analyze button.

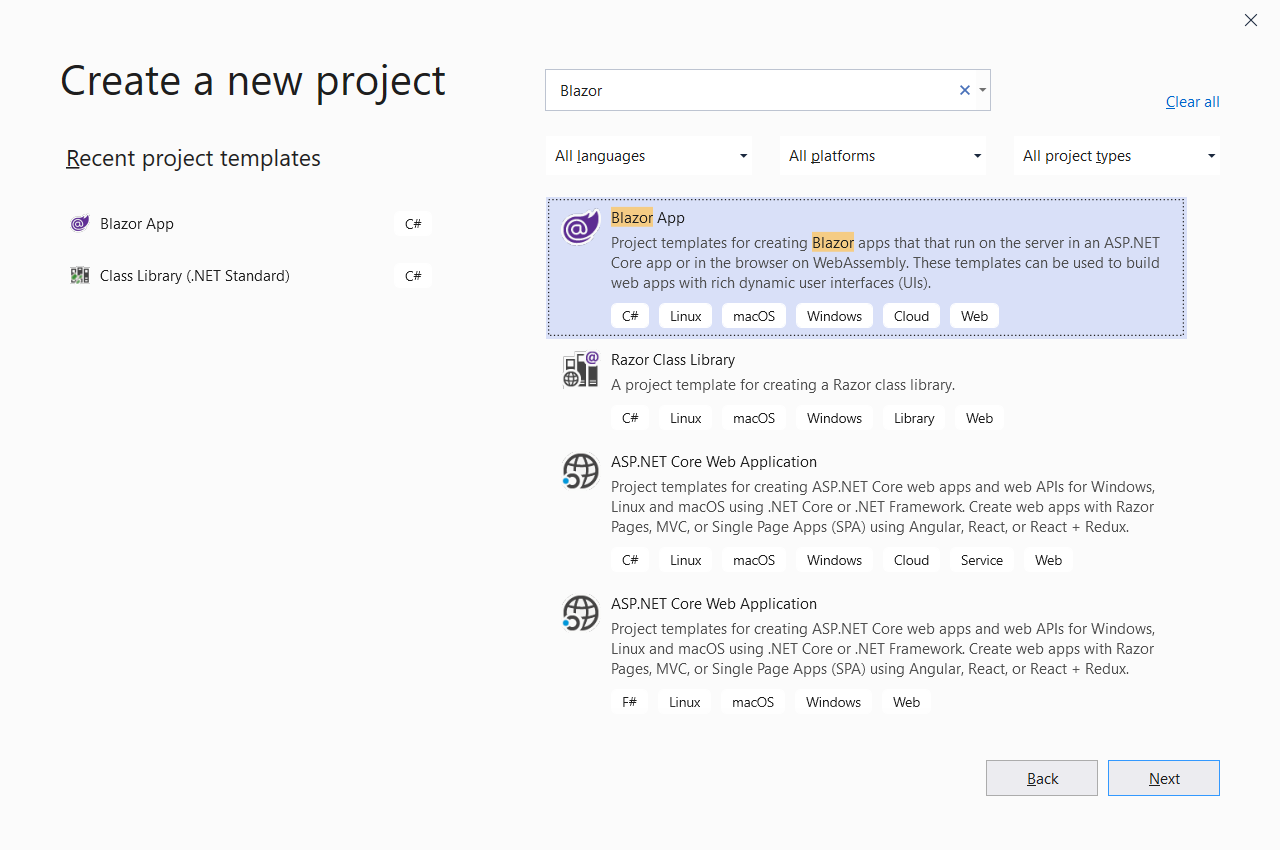

[Figure: Attach new NDepend Project to Visual Studio Solution]

Now, I invite you to interpret some results with me. So, let's start.

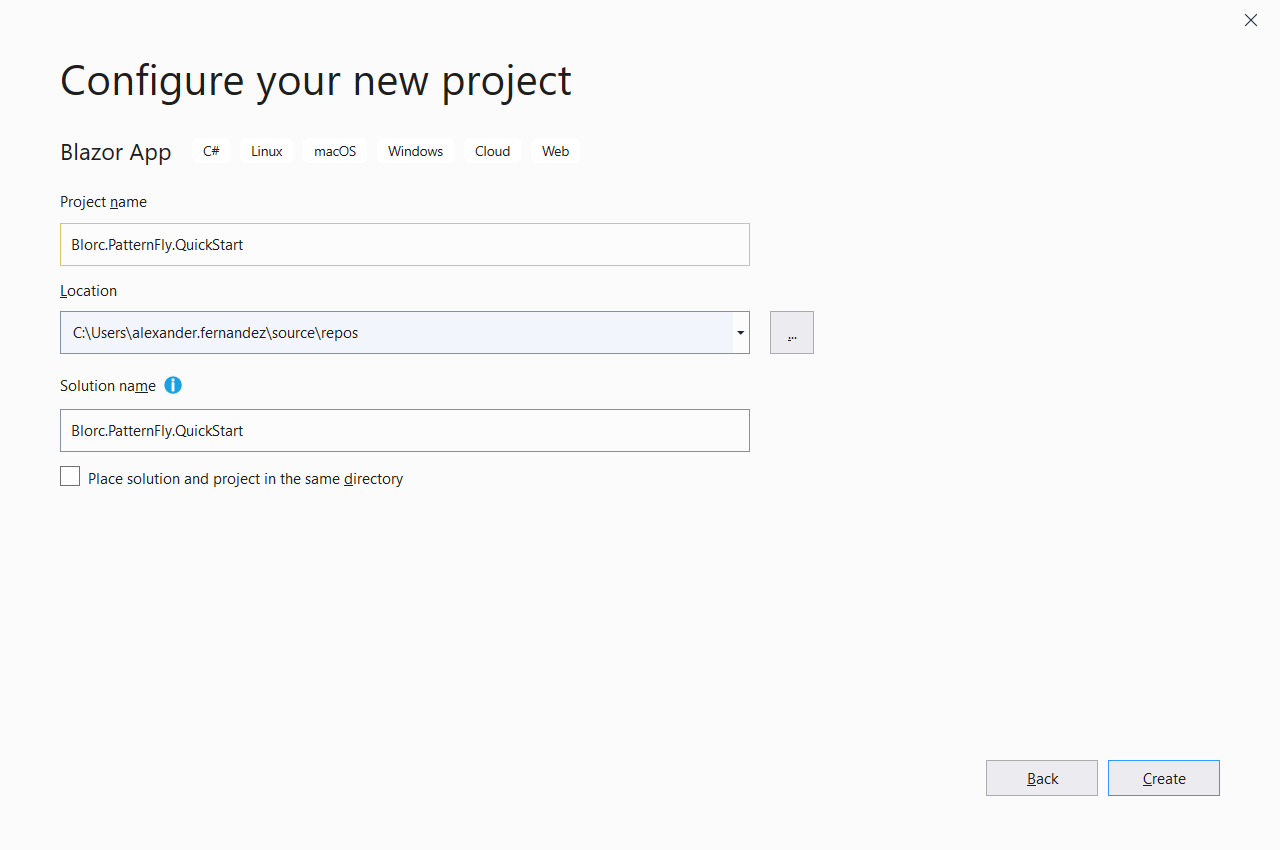

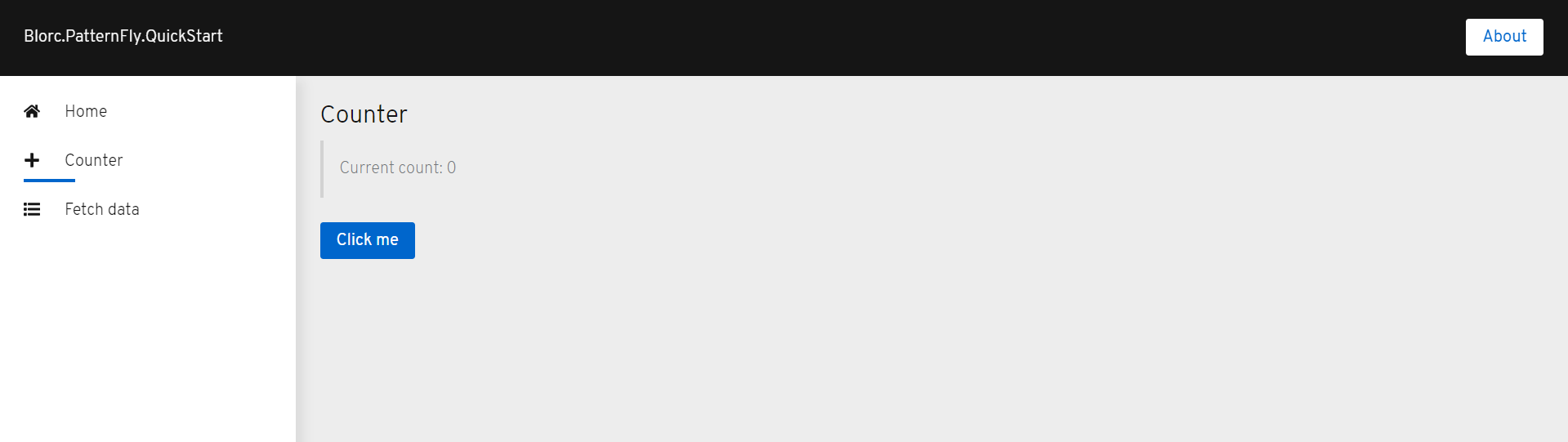

Interpreting the NDepend analysis report

One of the outputs of the analysis is a web report. The main page includes a report summary with Diagrams, Application Metrics, Quality Gates summary, and Rules summary sections.

[Figure: NDepend Report Summary]

[Figure: Navigation Menu]

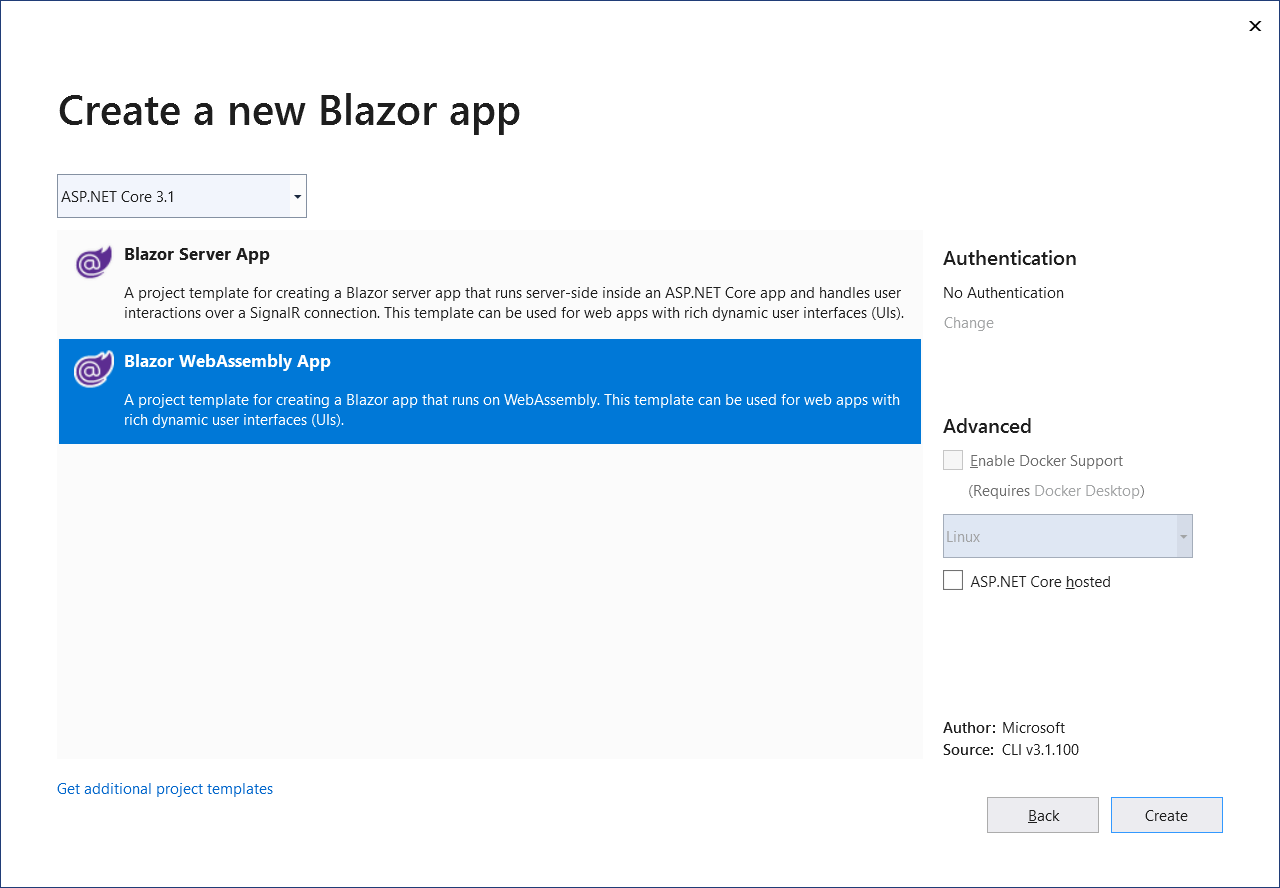

It also includes a navigation menu to drill down into more detailed information, including Quality Gates, Rules, Trend Charts, Metrics, Dependencies, Hot Spots, Object-Oriented Design, API Breaking Changes, Code Coverage, Dead Code, Code Diff Summary, Build Order, Abstractness vs. Instability, and Analysis Log.

But let's take a look at these summary sections.

Diagrams

The diagrams section includes the Dependency Graph, Dependency Matrix, Treemap Metric View, and Abstractness vs. Instability.

[Figure: Dependency Graph]

[Figure: Dependency Matrix]

[Figure: Treemap Metric View (Color Metric Coverage)]

So far, I understand these diagrams and can interpret them relatively easily.

But wait a second. What is this Abstractness versus Instability?

[Figure: Abstractness versus Instability]

According to the documentation, the Abstractness versus Instability diagram helps to detect which assemblies are potentially painful to maintain (i.e., concrete and stable) and which assemblies are potentially useless (i.e., abstract and instable).

Abstractness: If an assembly contains many abstract types (i.e., interfaces and abstract classes) and few concrete types, it is considered abstract.

Instability: An assembly is considered stable if its types are used by a lot of types from other assemblies. In this context, stable means painful to modify.

| Component | Abstractness (A) | Instability (I) | Distance (D) |
| --- | --- | --- | --- |
| StoneAssemblies.MassAuth | 0 | 0.96 | 0.03 |
| StoneAssemblies.MassAuth.Rules | 1 | 0.4 | 0.28 |
| StoneAssemblies.MassAuth.Messages | 0.43 | 0.62 | 0.04 |
| StoneAssemblies.MassAuth.Hosting | 0.14 | 0.99 | 0.09 |
| StoneAssemblies.MassAuth.Rules.SqlClient | 0.17 | 1 | 0.12 |
| StoneAssemblies.MassAuth.Server | 0 | 1 | 0 |
| StoneAssemblies.MassAuth.Proxy | 0 | 1 | 0 |

None of StoneAssemblies.MassAuth's components seems potentially painful to maintain or potentially useless. But I have to keep an eye on StoneAssemblies.MassAuth.Rules because of its distance (D) from the main sequence. Another candidate to review is StoneAssemblies.MassAuth.Messages, since ideal assemblies are either completely abstract and stable (I=0, A=1) or completely concrete and instable (I=1, A=0).
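To make the distance column less mysterious, here is a minimal Python sketch that recomputes D from A and I. It assumes the usual definition of the main sequence as the line A + I = 1 and takes D as the perpendicular distance to that line, |A + I - 1| / √2. That formula is my assumption, not something read from the report, but it reproduces the values in the table. The dictionary and function names are only illustrative.

```python
import math

# (Abstractness A, Instability I) per assembly, copied from the table above.
ASSEMBLIES = {
    "StoneAssemblies.MassAuth": (0.0, 0.96),
    "StoneAssemblies.MassAuth.Rules": (1.0, 0.4),
    "StoneAssemblies.MassAuth.Messages": (0.43, 0.62),
    "StoneAssemblies.MassAuth.Hosting": (0.14, 0.99),
    "StoneAssemblies.MassAuth.Rules.SqlClient": (0.17, 1.0),
    "StoneAssemblies.MassAuth.Server": (0.0, 1.0),
    "StoneAssemblies.MassAuth.Proxy": (0.0, 1.0),
}


def distance_from_main_sequence(abstractness: float, instability: float) -> float:
    """Perpendicular distance from the point (I, A) to the line A + I = 1.

    Assumed formula: |A + I - 1| / sqrt(2).
    """
    return abs(abstractness + instability - 1.0) / math.sqrt(2)


# Distance per assembly, rounded to two decimals like the report.
distances = {
    name: round(distance_from_main_sequence(a, i), 2)
    for name, (a, i) in ASSEMBLIES.items()
}

# The assembly farthest from the main sequence is the first one to review.
farthest = max(distances, key=distances.get)

if __name__ == "__main__":
    for name, d in sorted(distances.items(), key=lambda item: item[1], reverse=True):
        print(f"{name}: D = {d:.2f}")
    print("Farthest from the main sequence:", farthest)
```

Sorting by D puts StoneAssemblies.MassAuth.Rules at the top, which matches the reading above: it is fully abstract (A=1) but only moderately stable (I=0.4).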

Application Metrics

The application metrics section shows the following summary.

[Figure: Application Metrics]

It looks like some metrics depend on a baseline. Since this is the first time I have run NDepend's analysis over the StoneAssemblies.MassAuth code, there are no previous executions to compare against, so no differences are reported. But this helps me stay alert to the low 66.99% test coverage, the 3 failing quality gates, the violations of 2 critical rules, and the 15 high-severity issues.

Quality Gates

In this section, it is possible to view more details on the failing quality gates: the percentage of coverage, the critical rules violated, and the debt rating per namespace.

[Figure: Quality Gates]

Wait a second, again. What does debt rating per namespace mean?

According to the documentation, this quality gate forbids namespaces with a poor debt rating. By default, a debt ratio greater than 20% is considered a poor debt rating.

| Namespace | Debt Ratio (%) | Issues |
| --- | --- | --- |
| StoneAssemblies.MassAuth.Services | 39.01 | 8 |
| StoneAssemblies.MassAuth.Services.Attributes | 37.62 | 3 |
| StoneAssemblies.MassAuth.Server | 23.67 | 6 |
| StoneAssemblies.MassAuth.Rules.SqlClient.Rules | 36.24 | 3 |
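The check behind this quality gate can be sketched in a few lines of Python. This is only an illustration of the 20% threshold mentioned above, applied to the debt ratios from the table. It is not NDepend's actual rule code, and the names `DEBT_RATIO`, `POOR_DEBT_THRESHOLD`, and `failing_namespaces` are hypothetical.

```python
# Debt ratio (%) per namespace, copied from the table above.
DEBT_RATIO = {
    "StoneAssemblies.MassAuth.Services": 39.01,
    "StoneAssemblies.MassAuth.Services.Attributes": 37.62,
    "StoneAssemblies.MassAuth.Server": 23.67,
    "StoneAssemblies.MassAuth.Rules.SqlClient.Rules": 36.24,
}

# Default threshold mentioned in the text: above 20% is a poor debt rating.
POOR_DEBT_THRESHOLD = 20.0


def failing_namespaces(ratios, threshold=POOR_DEBT_THRESHOLD):
    """Return the namespaces whose debt ratio exceeds the threshold, worst first."""
    poor = [name for name, ratio in ratios.items() if ratio > threshold]
    return sorted(poor, key=lambda name: ratios[name], reverse=True)


if __name__ == "__main__":
    for name in failing_namespaces(DEBT_RATIO):
        print(f"{name}: {DEBT_RATIO[name]:.2f}%")
```

All four namespaces are above the threshold, so each one contributes to the failing quality gate. The worst offender, StoneAssemblies.MassAuth.Services, is the natural place to start paying down debt.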

Rules summary

The final section is the Rules summary. It lists the issues per rule in the following table.

[Figure: Rules summary]

NDepend tells me that I violated 2 critical rules: one to avoid mutually dependent namespaces and the other to avoid having different types with the same name. That sounds weird, but who knows, even I can make mistakes ;)

What's next?

In the near future, I will integrate the NDepend analysis into the build process, so I can easily share with you the evolution of this library in terms of quality. If you are interested in such an experience, wait for the next post.

As you already know, StoneAssemblies.MassAuth is a work in progress, which includes some unresolved technical debt. But, as I told you once, it is possible to make mistakes (critical or not), but being constantly aware of your code quality makes the difference between the apparent and intrinsic quality of your sources. If you are a .NET developer, NDepend is a great tool to stay aware of your code quality.

Yeah, you are right, I have some work to do here to fix this as soon as possible. But remember, StoneAssemblies.MassAuth is also an open-source project, so you are welcome to contribute ;)